Storage protocols comparison – Fibre Channel, FCoE, Infiniband, iSCSI?

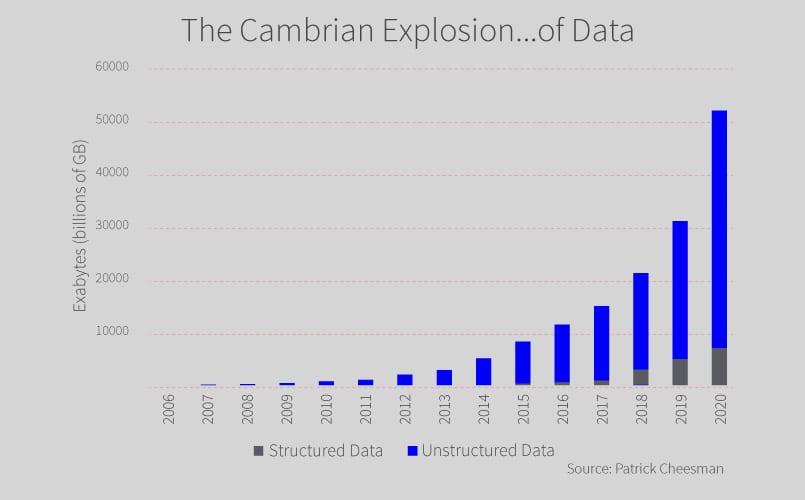

Data is Growing:

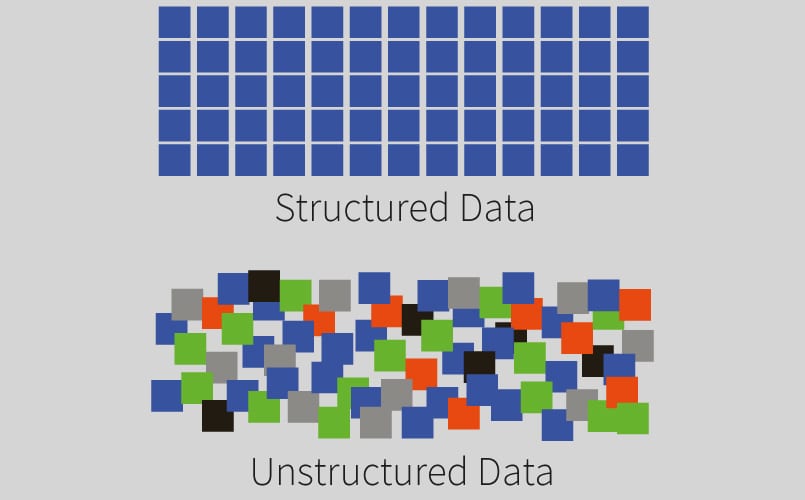

The amount of digital data in the world is growing exponentially, doubling roughly every two years, and it is changing how we live. Data can be divided into two kinds: structured data (highly organized and made up mostly of tables whose rows and columns define their meaning, such as Excel spreadsheets and relational databases) and unstructured data (books, letters, audio and video files, PowerPoint presentations, images and so on).

The volume of unstructured data has exploded in the past decade:

The growth of structured versus unstructured data over the past decade shows that unstructured data accounts for more than 90% of all data. “Between the dawn of civilization and 2003, we only created five exabytes; now we’re creating that amount every two days. By 2020, that figure is predicted to sit at 53 zettabytes (53 trillion gigabytes), an increase of 50 times.” (Hal Varian, Chief Economist at Google). Good examples can be found even in traditional industries such as energy, which are changing as well. Here is a quote from the vice-president of General Electric's software center: “The amount of data generated by sensor networks on heavy equipment is astounding. A day’s worth of real-time feeds on Twitter amounts to 80GB. One sensor on a blade of a turbine generates 520GB per day and you have 20 of them.” These sensors produce real-time big data that enables GE to manage the efficiency of each blade of every turbine in a wind farm.

Data needs to be stored:

With data volumes this large, the choice of storage technology becomes very important. Three parameters largely determine how fast we can access our stored data:

1. Bandwidth – a measure of the data transfer rate, MB/s;

2. Latency – the time taken to complete an I/O request, also known as response time. It is usually measured in milliseconds (1/1000 of a second) and, increasingly, in microseconds (1/1000 of a millisecond);

3. IOPS – input/output operations per second (pronounced "eye-ops"), a performance measurement used to characterize storage devices such as hard disk drives (HDD), solid state drives (SSD) and storage area networks (SAN).
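The three parameters are related, and a rough sketch can make that concrete. The snippet below assumes two standard back-of-the-envelope relations that the list above does not spell out: bandwidth is IOPS multiplied by the I/O size, and the IOPS ceiling follows from latency and the number of outstanding requests (Little's law). The function and parameter names are illustrative only.

```python
def throughput_mb_s(iops: float, block_size_kib: float) -> float:
    """Bandwidth (MB/s, 1 MB = 10**6 bytes) implied by an IOPS figure at a given I/O size."""
    return iops * block_size_kib * 1024 / 1_000_000


def max_iops(latency_ms: float, queue_depth: int = 1) -> float:
    """Upper bound on IOPS from Little's law: outstanding requests divided by latency."""
    return queue_depth * 1000 / latency_ms


if __name__ == "__main__":
    # Hypothetical device: 10,000 IOPS at 4 KiB per request.
    print(throughput_mb_s(10_000, 4))
    # Hypothetical device: 0.5 ms response time with 8 requests in flight.
    print(max_iops(0.5, queue_depth=8))
```

For example, 10,000 IOPS at 4 KiB per request corresponds to about 41 MB/s, which is why a device can post a high IOPS figure and still show a modest bandwidth number.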

Two other parameters every data center cares about are power consumption and reliability.

Storage protocol comparison – Fibre Channel, FCoE, Infiniband, iSCSI:

There are several storage protocols to choose from. The choice largely determines our networking parameters, the type of network infrastructure we will have, which brands of switches and routers we are likely to see in the data center, and even the cabling we will use.

Below is a summary of the possible choices, followed by a closer look at each of them:

| Attribute | InfiniBand | Fibre Channel | FCoE | iSCSI |
|---|---|---|---|---|
| Bandwidth (Gbps) | 2.5/5/10/14/25/50 (per lane) | 8/16/32/128 | 10/25/40/100 | 10/25/40/100 |
| Adapter latency* | 25 µs | 50 µs | 200 µs | Wide range |
| Switch latency per hop* | 100-200 ns | 700 ns | 200 ns | 200 ns |
| Adapter | HCA – host channel adapter | HBA – host bus adapter | CNA – converged network adapter | NIC – network interface card |
| Switch brands | Mellanox, Intel | Cisco, Brocade | Cisco, Brocade | HPE, Cisco, Brocade |
| Interface card brands | Mellanox, Intel | Qlogic, Emulex | Qlogic, Emulex | Intel, Qlogic |
| Deployment amount | x | xx | x | xxx |
| Reliability | xx | xxx | xx | xx |
| Ease of management | x | xx | xx | xxx |
| Future upgrade path | xx | xxx | xx | xxx |

*Please note that we were not able to obtain the adapter latency and per-hop switch latency figures above from a neutral source, so they are approximate. They still give a rough idea of how each protocol performs in this area.

x – Low

xx – Medium

xxx – High
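To see how the adapter and switch figures combine, here is a rough sketch that adds them up along a path. It assumes a simple model that is ours, not a measured result: one adapter at each end of the path plus a fixed latency per switch hop. The values are copied from the comparison table above (using the upper bound of 200 ns for InfiniBand switches) and inherit its caveats; iSCSI is left out because the table gives no single adapter figure for it.

```python
# Approximate figures taken from the comparison table, in nanoseconds.
ADAPTER_LATENCY_NS = {"InfiniBand": 25_000, "Fibre Channel": 50_000, "FCoE": 200_000}
SWITCH_HOP_LATENCY_NS = {"InfiniBand": 200, "Fibre Channel": 700, "FCoE": 200}


def path_latency_ns(protocol: str, hops: int, adapters: int = 2) -> int:
    """Estimated one-way latency: adapters at both ends plus every switch hop in between."""
    return adapters * ADAPTER_LATENCY_NS[protocol] + hops * SWITCH_HOP_LATENCY_NS[protocol]


if __name__ == "__main__":
    for name in ADAPTER_LATENCY_NS:
        print(f"{name}: {path_latency_ns(name, hops=3) / 1000:.1f} us over 3 hops")
```

With these numbers the adapters dominate: even three switch hops add only a few microseconds at most, so the adapter column matters far more than the per-hop column when comparing protocols.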

InfiniBand (IB)

InfiniBand (IB) is one of the latest computer-networking communications standards used in high-performance computing. It features very high throughput and very low latency and is most commonly used to interconnect supercomputers. Mellanox and Intel are the two main manufacturers of InfiniBand host channel adapters and network switches. Intel entered this market by acquiring Qlogic's InfiniBand division and Cray’s HPC interconnect business.

Per-lane signaling rates range from 2.5 Gbit/s (SDR) up to 50 Gbit/s (HDR), and up to 12 lanes can be combined into a single link, giving a connection of up to 600 Gbit/s. For more information, see the table below:

| | SDR | DDR | QDR | FDR10 | FDR | EDR | HDR | NDR | XDR |
|---|---|---|---|---|---|---|---|---|---|
| Adapter latency (microseconds) | 5 | 2.5 | 1.3 | 0.7 | 0.7 | 0.5 | | | |
| Signaling rate (Gbit/s) | 2.5 | 5 | 10 | 10.3125 | 14.0625 | 25.78125 | 50 | 100 | 250 |
| Speeds for 4x links (Gbit/s) | 8 | 16 | 32 | 40 | 54.54 | 100 | 200 | | |
| Speeds for 8x links (Gbit/s) | 16 | 32 | 64 | 80 | 109.08 | 200 | 400 | | |
| Speeds for 12x links (Gbit/s) | 24 | 48 | 96 | 120 | 163.64 | 300 | 600 | | |
| Year | 2001 | 2005 | 2007 | 2011 | 2011 | 2014 | 2017 | after 2020 | future |
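The link speeds in the table follow from the per-lane signaling rate, the number of lanes and the line encoding. The sketch below reproduces the SDR to EDR columns, assuming the encodings defined for those generations (8b/10b for SDR, DDR and QDR; 64b/66b from FDR10 onward), which the table itself does not state. HDR and later are left out because their encoding and error-correction overhead differ.

```python
from fractions import Fraction

# Per-lane signaling rate (Gbit/s) and encoding efficiency per generation.
# The encoding column is an assumption added for this sketch; rates come from the table.
GENERATIONS = {
    "SDR": (Fraction("2.5"), Fraction(8, 10)),
    "DDR": (Fraction(5), Fraction(8, 10)),
    "QDR": (Fraction(10), Fraction(8, 10)),
    "FDR10": (Fraction("10.3125"), Fraction(64, 66)),
    "FDR": (Fraction("14.0625"), Fraction(64, 66)),
    "EDR": (Fraction("25.78125"), Fraction(64, 66)),
}


def data_rate_gbps(generation: str, lanes: int) -> float:
    """Usable link speed: signaling rate x lane count x encoding efficiency."""
    rate, efficiency = GENERATIONS[generation]
    return float(rate * lanes * efficiency)


if __name__ == "__main__":
    for gen in GENERATIONS:
        speeds = ", ".join(f"{lanes}x = {data_rate_gbps(gen, lanes):.2f}" for lanes in (4, 8, 12))
        print(f"{gen}: {speeds} Gbit/s")
```

Exact fractions are used so that the 64/66 factor does not pick up floating-point noise before the final conversion.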

InfiniBand interconnects can use DAC cables, AOCs and optical transceivers, as well as DAC and AOC breakout cables.

| InfiniBand | Speed | Form Factor | DAC | AOCs | Optical transceivers | DAC breakout | AOC breakout |
|---|---|---|---|---|---|---|---|
| HDR | 200Gb/s | QSFP56 | Y | Y | Y | Y | Y |
| EDR | 100Gb/s | QSFP28 | Y | Y | Y | | |
| FDR | 56Gb/s | QSFP+ | Y | Y | Y | | |
| FDR10 QDR | 40Gb/s | QSFP+ | Y | Y | Y | | |
| QDR | 40Gb/s | QSFP+ | Y | Y | Y | | |
| Distance | | | 0.5m to 7m | 3m to 300m | 30m to 10km | 1-2.5m | 3m to 30m |
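The distance row can be turned into a quick lookup when planning a link. The sketch below assumes that the five distance ranges map, in order, to the five cable columns (DAC, AOC, optical transceiver, DAC breakout, AOC breakout); the dictionary and function names are illustrative only.

```python
# Reach per interconnect type in metres, as read from the distance row of the table.
CABLE_REACH_M = {
    "DAC": (0.5, 7),
    "AOC": (3, 300),
    "Optical transceiver": (30, 10_000),
    "DAC breakout": (1, 2.5),
    "AOC breakout": (3, 30),
}


def cable_options(distance_m: float) -> list[str]:
    """Return every interconnect type whose reach covers the requested distance."""
    return [name for name, (low, high) in CABLE_REACH_M.items() if low <= distance_m <= high]


if __name__ == "__main__":
    for distance in (2, 5, 50, 2_000):
        print(distance, "m:", cable_options(distance))
```

Where several options overlap, as they do at a few metres, the final choice usually comes down to cost and to which options exist for the given speed generation.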

Fibre Channel (FC)

Fibre Channel (FC) became the leading choice for SAN networking during the mid-1990s. Traditional Fibre Channel networks contain special-purpose hardware called Fibre Channel switches that connect the storage to the SAN plus Fibre Channel HBAs (host bus adapters) that connect these switches to server computers. Fibre Channel is a mature low-latency, high-bandwidth, high-throughput protocol. As a storage protocol, FC is simple to configure and administer, and has had wide adoption over the past 18 years.

Fibre Channel speeds have evolved: modern FC switches and HBAs support 16GFC, 32GFC and 128GFC, and 256GFC and 512GFC are on the roadmap.

| Name | Line rate (GBaud) | Available Since |
|---|---|---|
| 1GFC | 1.0625 | 1997 |
| 2GFC | 2.125 | 2001 |
| 4GFC | 4.25 | 2004 |
| 8GFC | 8.5 | 2005 |
| 10GFC | 10.51875 | 2008 |
| 16GFC | 14.025 | 2011 |
| 32GFC | 28.05 | 2016 |
| 128GFC | 28.05×4=112.2 | 2016 |
| 256GFC | | 2021 |
| 512GFC | | 2024 |

The mainstream brands for Fibre Channel switches are Cisco and Brocade, and the mainstream brands for HBAs are Qlogic and Emulex. Dell and Lenovo also sell FC switches, but these are Brocade OEM equipment.

FC switches and HBAs are interconnected with optical modules. A very useful resource for all Brocade FC switch users is the Brocade Fibre Channel Transceiver Support Matrix.

The matrix shows that Brocade supports FC modules in SWL (short wavelength, up to 300m), LWL (long wavelength, up to 10km) and ELWL (extended long wavelength, up to 25km) variants. EDGE Optical Solutions can offer not only standard modules but also hybrid solutions that support longer distances (for example, up to 100km for 8G FC), as well as 8G/16G FC CWDM and DWDM types, which can be very useful for data center interconnection. Another good interconnect resource, if you have a Cisco-based FC infrastructure, is the Cisco MDS 9000 Family Pluggable Transceivers Data Sheet, which shows the relationship between Cisco FC platforms and the Fibre Channel transceivers available for them.

Fibre Channel over Ethernet (FCoE)

An alternative form of FC called Fibre Channel over Ethernet (FCoE) was developed to lower the cost of FC solutions by eliminating the need to purchase HBA hardware. FCoE is FC over Lossless Ethernet.

Fibre Channel required three primary extensions to deliver the capabilities of Fibre Channel over Ethernet networks:

- Encapsulation of native Fibre Channel frames into Ethernet Frames.

- Extensions to the Ethernet protocol itself to enable an Ethernet fabric in which frames are not routinely lost during periods of congestion.

- Mapping between Fibre Channel N_port IDs (aka FCIDs) and Ethernet MAC addresses.
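The first and third extensions can be illustrated with a short sketch. It assumes the fabric-provided MAC address (FPMA) scheme, in which the MAC address is a 24-bit FC-MAP prefix followed by the 24-bit FC ID, the default FC-MAP value of 0E-FC-00, and the FCoE EtherType 0x8906; none of these values appear in the text above. The sketch stops at the Ethernet header and does not build the FCoE header or carry an actual FC frame.

```python
import struct

FCOE_ETHERTYPE = 0x8906    # EtherType that marks an Ethernet frame as FCoE
DEFAULT_FC_MAP = 0x0EFC00  # default 24-bit FC-MAP prefix (assumed here)


def fpma(fc_id: int, fc_map: int = DEFAULT_FC_MAP) -> str:
    """Map a 24-bit FC ID to a fabric-provided MAC address (FC-MAP followed by FC ID)."""
    if not 0 <= fc_id <= 0xFFFFFF:
        raise ValueError("FC ID must fit in 24 bits")
    mac = (fc_map << 24) | fc_id
    return ":".join(f"{byte:02x}" for byte in mac.to_bytes(6, "big"))


def ethernet_header(dst_mac: str, src_mac: str) -> bytes:
    """Build the 14-byte Ethernet header that precedes an encapsulated FC frame."""
    dst = bytes.fromhex(dst_mac.replace(":", ""))
    src = bytes.fromhex(src_mac.replace(":", ""))
    return dst + src + struct.pack("!H", FCOE_ETHERTYPE)


if __name__ == "__main__":
    source = fpma(0x010203)
    target = fpma(0x010300)
    print(source, target, ethernet_header(target, source).hex())
```

Because the FC ID is embedded in the MAC address, a switch can derive one from the other without a separate lookup table, which is the point of the mapping extension.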

The key advantage of FCoE is the ability to use Ethernet cabling for both data center network and storage traffic, which removes the need for a separate Fibre Channel fabric and infrastructure. It reduces the number of cables as well as the number of interface cards required. Instead of separate Ethernet network cards and Fibre Channel host bus adapters (HBAs), the two functions are combined on a single converged network adapter (CNA). The CNA handles both protocols on a single card, while allowing storage and network domains to be controlled independently.

Many Fibre Channel switches now support FCoE as well; for example, the Cisco Nexus and Cisco MDS series support it, as do Brocade switches. Cisco was one of the vendors pushing FCoE hard, as it was not as strong as Brocade in FC but had a lot of expertise in Ethernet and IP. While FCoE promises improved economics, it does not improve availability or performance relative to FC, and it adds administrative complexity. Another consideration is the link between the low adoption rate and the lack of experienced FCoE administrators and service and support personnel. FCoE adoption has not been successful and, contrary to what FCoE vendors initially expected, FCoE has not replaced FC.

iSCSI

iSCSI was created as a lower cost, lower performance alternative to Fibre Channel and started growing in popularity during the mid-2000s. iSCSI works with Ethernet switches and physical connections instead of specialized hardware built specifically for storage workloads. It provides data rates of 10 Gbps and higher.

iSCSI appeals especially to smaller businesses, which usually do not have staff trained in the administration of Fibre Channel technology. iSCSI was pioneered by IBM and Cisco in 1998. Unlike some SAN protocols, iSCSI requires no dedicated cabling; it can run over existing IP infrastructure. Because it uses Ethernet (or, more precisely, TCP/IP), there is a large number of vendors, products and components to choose from. As a result, iSCSI is often seen as a low-cost alternative to Fibre Channel. However, the performance of an iSCSI SAN deployment can degrade severely if it is not operated on a dedicated network or subnet (LAN or VLAN), because storage traffic then competes with other traffic for a fixed amount of bandwidth. For device interconnection in iSCSI we can use 10G/40G/100G Ethernet optical modules, DACs and AOCs.

Summary

Based on the analysis above, we can conclude that Fibre Channel is one of the best protocols for storage network infrastructure: it is mature, low-latency and high-bandwidth, and it is simple to configure and administer. The downside is that there are not many vendors to choose from, which makes the technology quite expensive. It can be a great technology for larger enterprises, as it is purpose-built to support storage, and that is all it does.

iSCSI is also a good choice of storage protocol. Because it is based on Ethernet, iSCSI is much less expensive than Fibre Channel. It uses standard Ethernet switches and cabling and operates at speeds of 1 Gbit/s, 10 Gbit/s, 40 Gbit/s and 100 Gbit/s. Basically, as Ethernet continues to advance, iSCSI advances right along with it. iSCSI seems to have been designed initially for smaller organizations that could not afford a separate FC network and were willing to accept somewhat lower performance for the sake of cost. With today's Ethernet speeds, that trade-off may no longer hold.

FCoE has not gone mainstream. Although FCoE vendors state that their solutions are less complex and more cost-effective thanks to reduced cabling and lower-cost host adapters, FC can offer far better performance than FCoE.

InfiniBand offers some of the best throughput and latency figures, but the downsides are that it is not as widely used, its administration can be much harder than for the other protocols, and it is an expensive technology.

These are our thoughts on the subject. As always, you are welcome to comment, ask questions or discuss: sales@edgeoptic.com.